Moving the Goalpost: How Custom Evaluation Metrics Improve Performance in Sports Betting

Table of Contents

- Introduction

- So What is an Evaluation Metric?

- How Does That Work in WolfTicketsAI?

- Creating a Custom Metric - Profitability

- Evaluating Performance of Profit vs Accuracy

- Accuracy By Confidence Score

- Exploring Actual Returns

- What Does This Mean for Me(the bettor)?

- UFC 288 Predictions

- What’s Next?

- PS ChatGPT Teaser

Introduction

For the last few years I’ve been working on building models that look to predict the outcomes of UFC fights to make profitable bets. This started as a way to learn the fundamentals of machine learning around a problem that was fun and few people focus on(sorry baseball). Throughout this process I’ve encountered a ton of promising leads that always reveal another problem, perhaps in the future this avenue will also turn out to be one, but a few other examples are:

- Feeding career stats data that is only accurate today, to fights years ago. Performance in testing was phenomenal, reaching over 85% accuracy, probably should have keyed me into a mistake.

- Using elaborately complex processes to curate features that make a model over fit to past performance.

- Trying to create a more balanced dataset for the UFC, specifically swapping the fighter’s corners to balance out red and blue chances. Matchmaking in the real world is still imbalanced so this just made things worse.

- Using a custom threshold for whether a prediction was for one fighter or another, this works but requires a ton of continual monitoring and adjusting as was just too brittle to recommend long term.

Now, WolfTicketsAI operates a model for the first time that was not optimized for prediction accuracy, instead focusing on profitability from single bets(don’t worry, the other models are still there). To do this I created an evaluation metric that targets profitability specifically, this article digs into the mechanics of how it works, and the performance gains from doing so.

Thanks to Dan aka /u/FlyingTriangle for the idea to dig into optimizing for profitability, his prodding kicked off this entire branch of work.

So What is an Evaluation Metric?

An Evaluation Metric is a quantitive measure used to assess how well a machine learning model is performing. This can have an impact on its training, optimization, and performance when in use. For WolfTicketsAI, we want to focus on how well a model is suited for helping us make better betting decisions on the outcomes of UFC fights. A traditional metric would be accuracy, a common default with lots of applicability to real world problems.

How Does That Work in WolfTicketsAI?

Until now, WolfTicketsAI operated 1 or 2 models, each are binary classification models. They look at the statistic differences over a range of information for each fighter, then they predict the probability of the red corner fighter winning.

All production models prior have used accuracy as their evaluation metric. Accuracy is the percentage of correct predictions made out of all the total predictions made. More specifically for WolfTicketsAI that would be how often they predict the red corner to win, and how often they do, as well as how often they predict a loss, and the red corner loses, all relative to the total number of predictions.

A few others are precision, recall, F1 Score, and Area Under the Receiver Operating Characteristic Curve(AUC-ROC). Wikipedia is a great place to dig more into each of them.

The issue with using this approach, while common is that we aren’t just trying to predict the outcome of a fight accurately, we are trying to place profitable wagers on fights. Those two prediction goals are slightly different, and so that leaves accuracy as a proxy metric for profitability.

Creating a Custom Metric - Profitability

Defining the metric as profitability guides us to look at the odds for both the red and blue corner fighters as well as all the other statistical information and the actual result when training.

A simplified example of our dataset looks like:

| result | fighter1 | fighter2 | strikes_per_minute | winning_percentage | fighter1_odds | fighter2_odds |

|---|---|---|---|---|---|---|

| 0 | Jon Jones | Cyril Gane | 24 | 10 | 1.57 | 3.0 |

| 1 | Chito Vera | Brandon Moreno | 17 | 54 | 2.5 | 1.53 |

| 0 | Robert Whittaker | Jarod Cannonier | 27 | 23 | 1.23 | 3.5 |

The odds were specified in decimal format to make it easier to determine the returns on a specific bet.

The actual returns are needed in order to model profitability, so the next step is to covert each odds field into profit, and to update the values to the correct amount the bet would returnn IF the bet was successful. In this case, we specify the same bet volume at all times, 10 dollars. After doing so, the example is:

| result | fighter1 | fighter2 | strikes_per_minute | winning_percentage | fighter_1_profit | fighter_2_profit |

|---|---|---|---|---|---|---|

| 0 | Jon Jones | Cyril Gane | 24 | 10 | 15.70 | 30.00 |

| 1 | Chito Vera | Brandon Moreno | 17 | 54 | 25.00 | 15.30 |

| 0 | Robert Whittaker | Jarod Cannonier | 27 | 23 | 12.30 | 35.00 |

With these values we can guide our model with a custom metric based on profitability as it will look at: result, fighter_1_profit, and fighter_2_profit.

The key for making this work is to make a metric for profit, and to correctly raise or lower it based on a prediction’s outcome. If the model predicts Jon Jones above, profit needs to rise by $15.70, however if Jon had lost that fight, the profit needs to fall by $10.00.

The code for doing this is:

1import numpy as np2

3BET_VALUE = 10.004

5def profit_score(y_true, y_pred_proba, fighter_1_profit, fighter_2_profit):6 # Change profitability to a win or loss.7 y_pred = np.round(y_pred_proba)8

9 # Start the profit index at 010 profit = 011 for true, pred, f1p, f2p in zip(y_true, y_pred, fighter_1_profit, fighter_2_profit):12 if true == pred:13 # Use fighter_1_profit as the amount if fighter1 was predicted and correct14 if pred == 1:15 profit += f1p16 # Use fighter_2_profit as the amount if fighter2 was predicted and correct17 elif pred == 2:18 profit += f2p19 # Reduce profits by $10.00 if the prediction was incorrect20 else:21 profit -= BET_VALUE22 return profitFrom here the implementation details are specific to your machine learning framework but it will end up being passed to your training function, similar to this:

1predictor = ClassificationModel(label='result',2 eval_metric=custom_profit_scorer).fit(train_data)Executing with a custom evaluation metric will allow the training process to bias its learnings towards your specified outcome, not just accuracy.

Evaluating Performance of Profit vs Accuracy

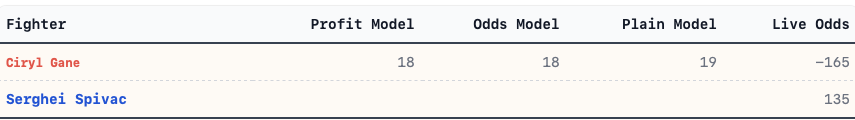

Prior to the Profit model, the most accurate approach was using a model that also factored in the current odds of the fight when the prediction was made. The content below explores the performance of that model vs the new profit oriented one.

Accuracy By Confidence Score

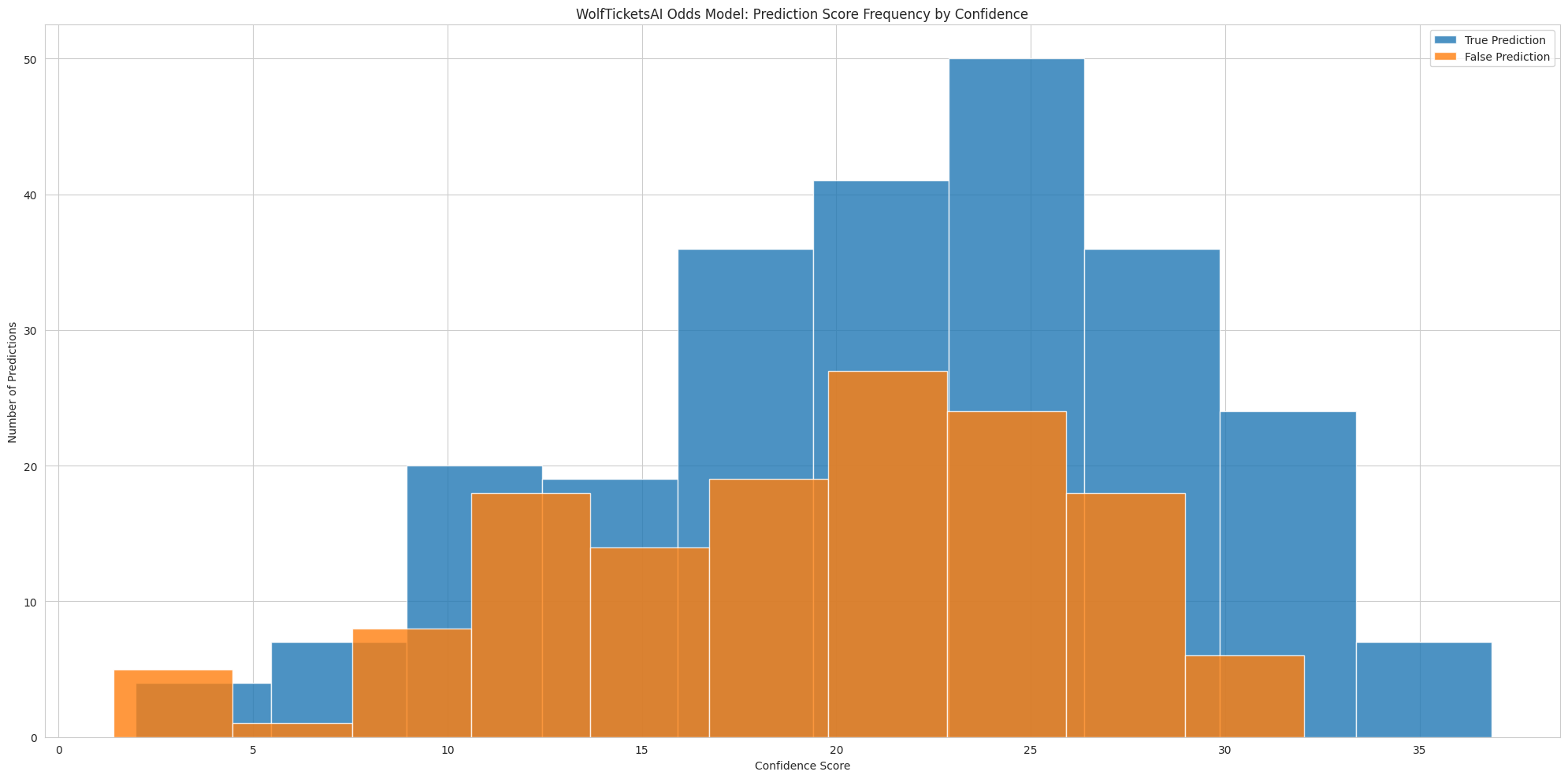

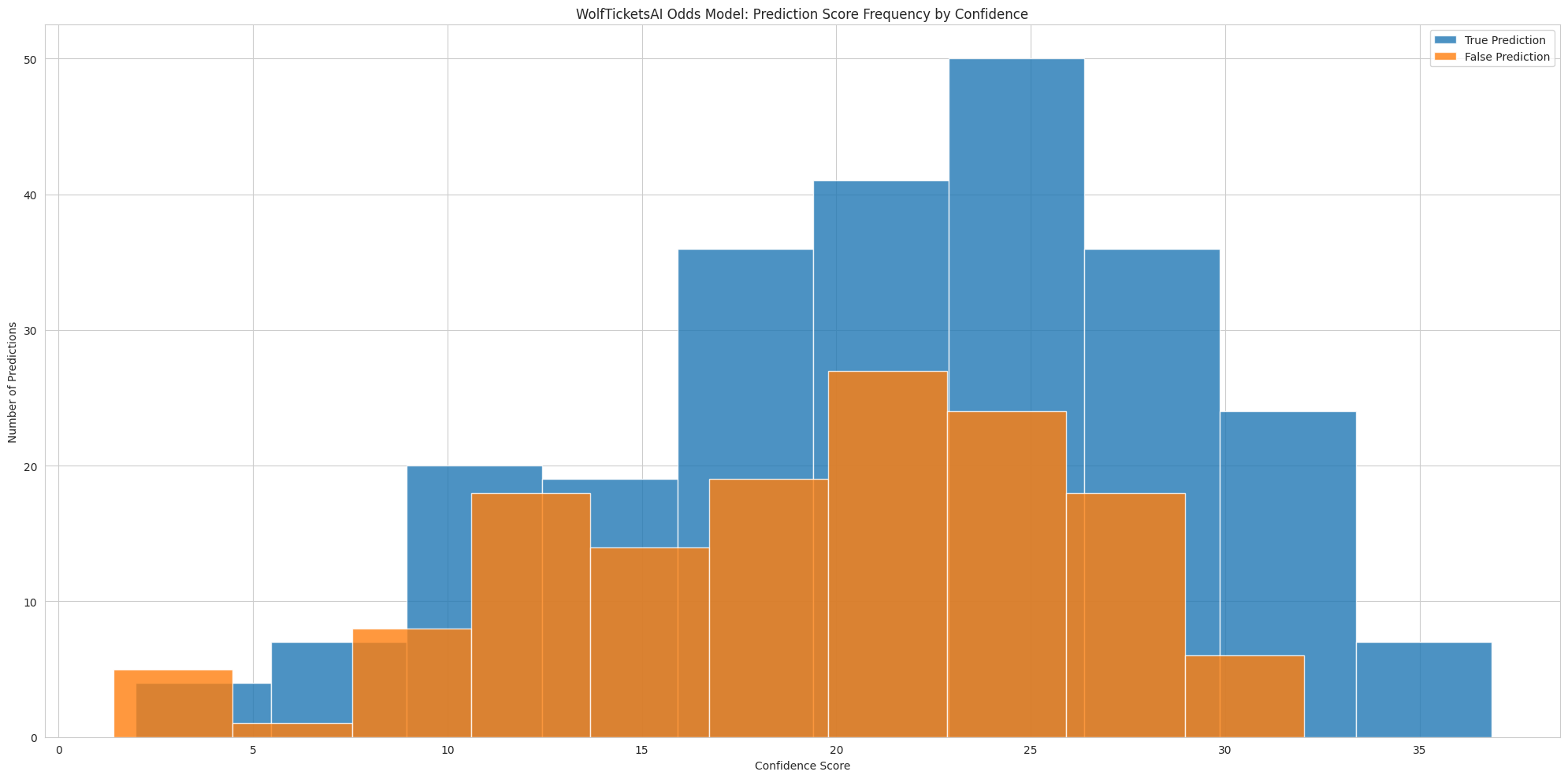

Take a look first at the WolfTicketsAI Odds Model for 2022:

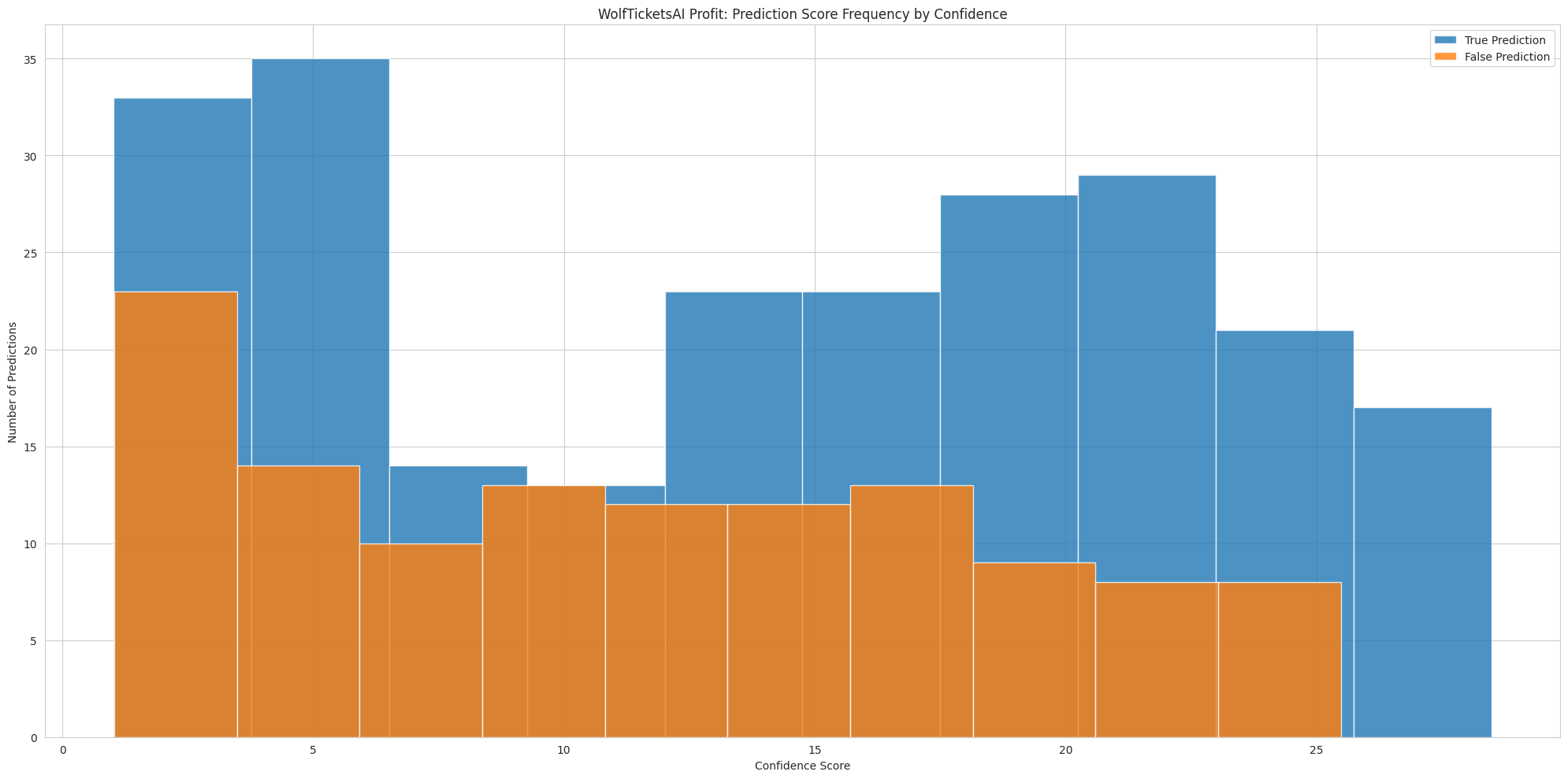

Compare that to our new profit focused model for 2022:

The Odds model shows a normalish distribution of predictions around the 20-30 confidence score range, this is not replicated in the Profit model, but we see that the performance is more accurate for every confidence score range except 10, where it actually just ties. Our first indication that we might be onto something useful.

Exploring Actual Returns

Accuracy is nice but what drives the return is the outcome from bets winning, and that is what this entire exercise has been about, so now let’s review those.

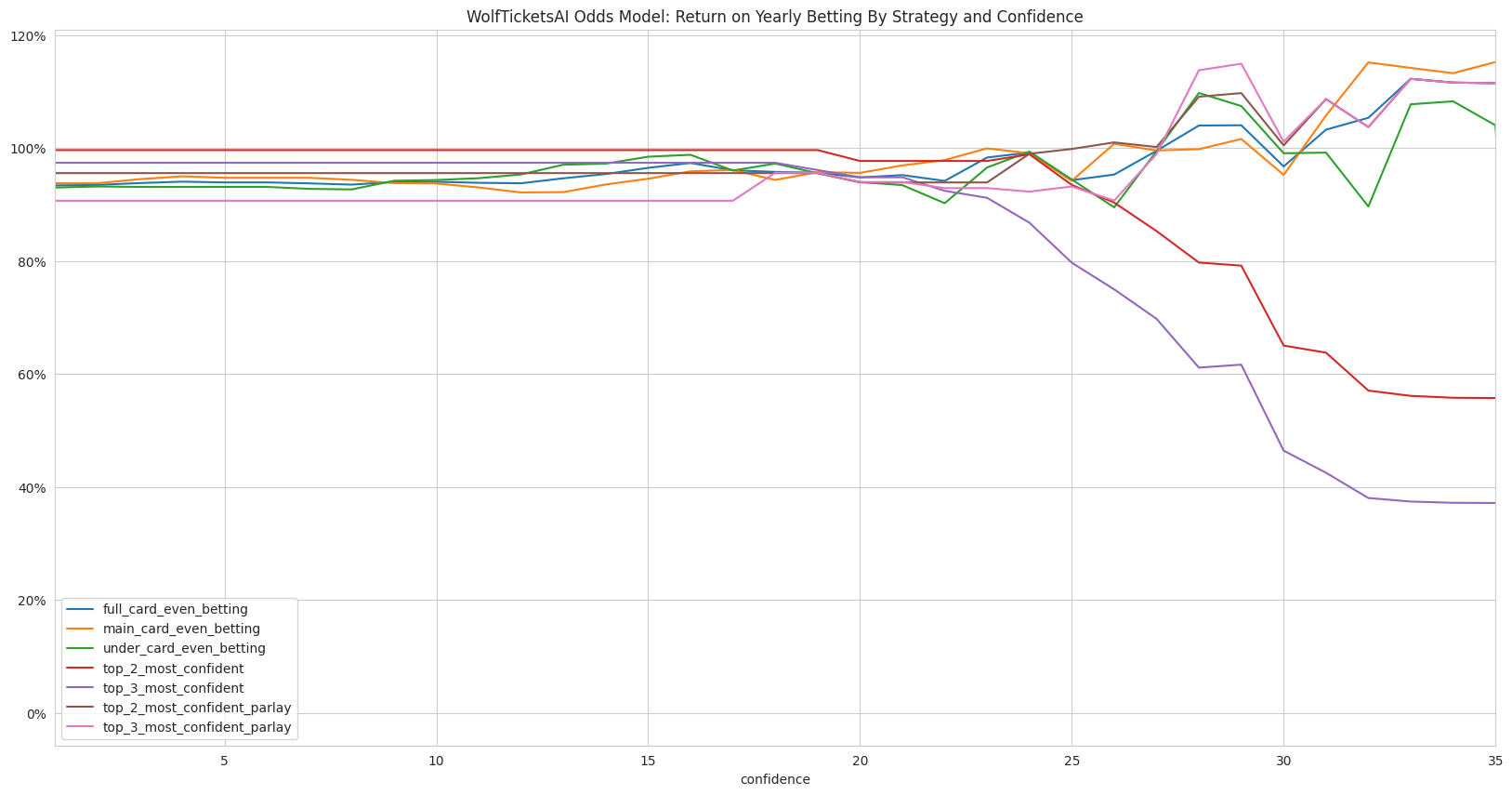

First the Odds based model for 2022:

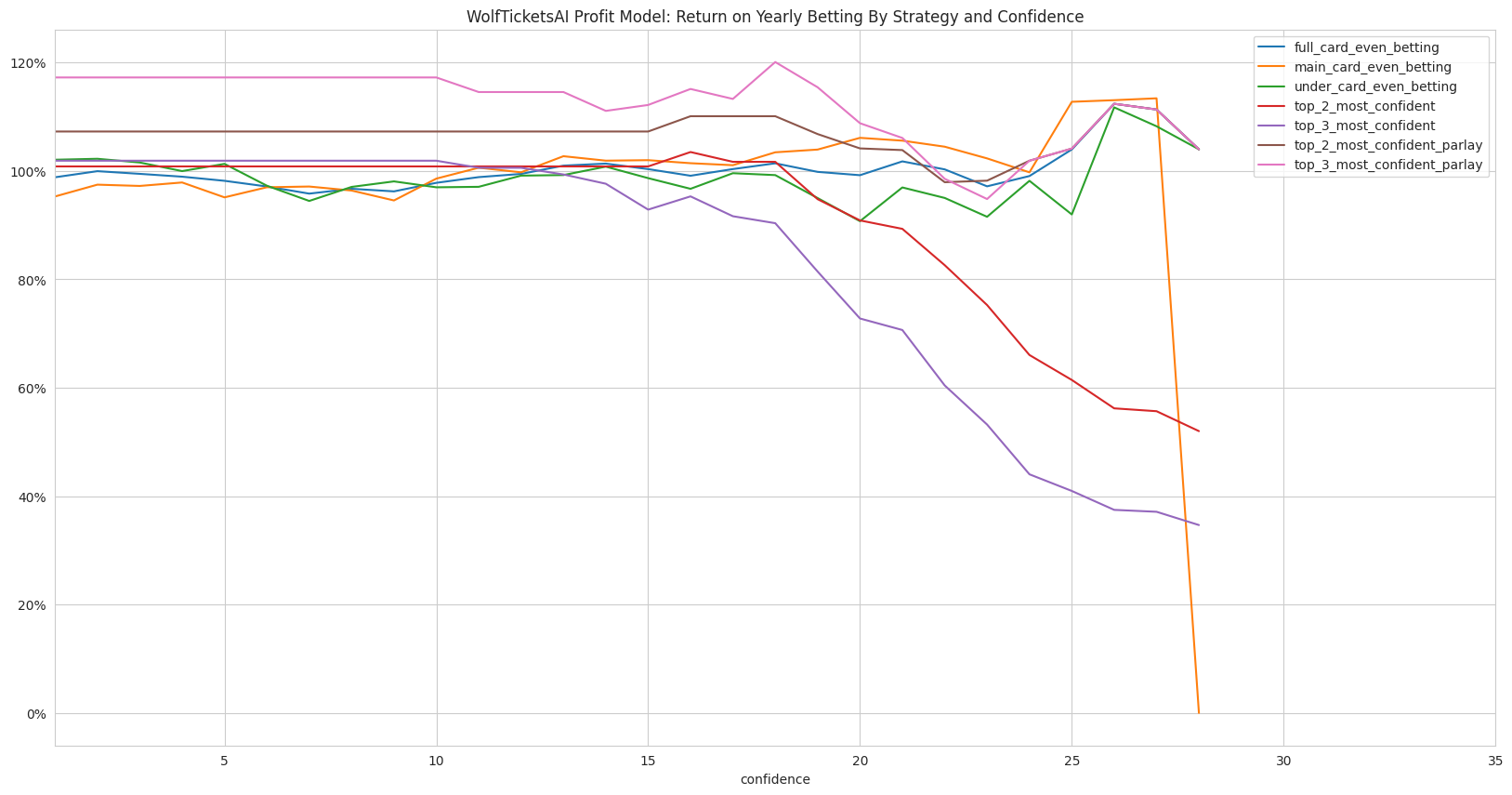

Now the Profit focused model for 2022:

It looks like all the effort was worth it! A winning strategy for the top 3 most confident predictions parlays yields nearly a +20% return for the year for many intervals, and touches it right before a confidence score of 20! Compared to the Odds model which only arrived there after even betting and adjusting a confidence score to nearly 35!

If you’d like to explore more of the results on your own you can download the full dataset here:WolfTicketsAI 2022 Predictions.

What Does This Mean for Me(the bettor)?

The reduction in the confidence score needed to reach a profitable outcome is important as it allows you to place more wagers(of smaller volumes) and to reduce the chance of each to have an outsized impact on your total returns.

Having more options that payout evenly or above even at lower scores increases the chance that you as a user can leverage the predictions successfully into your strategies.

UFC 288 Predictions

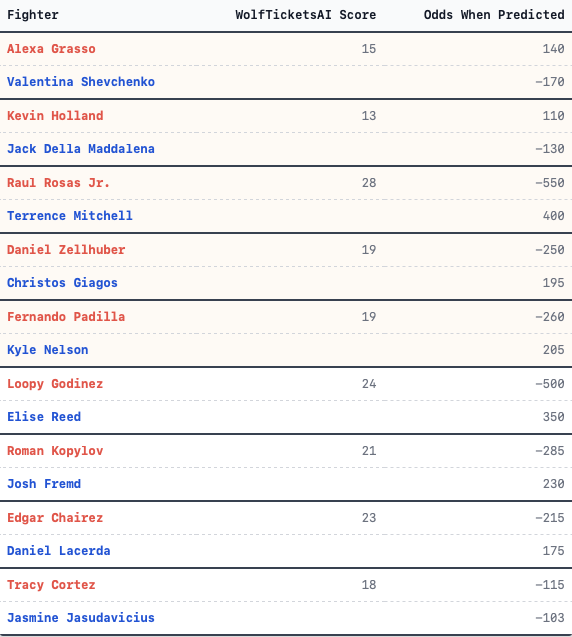

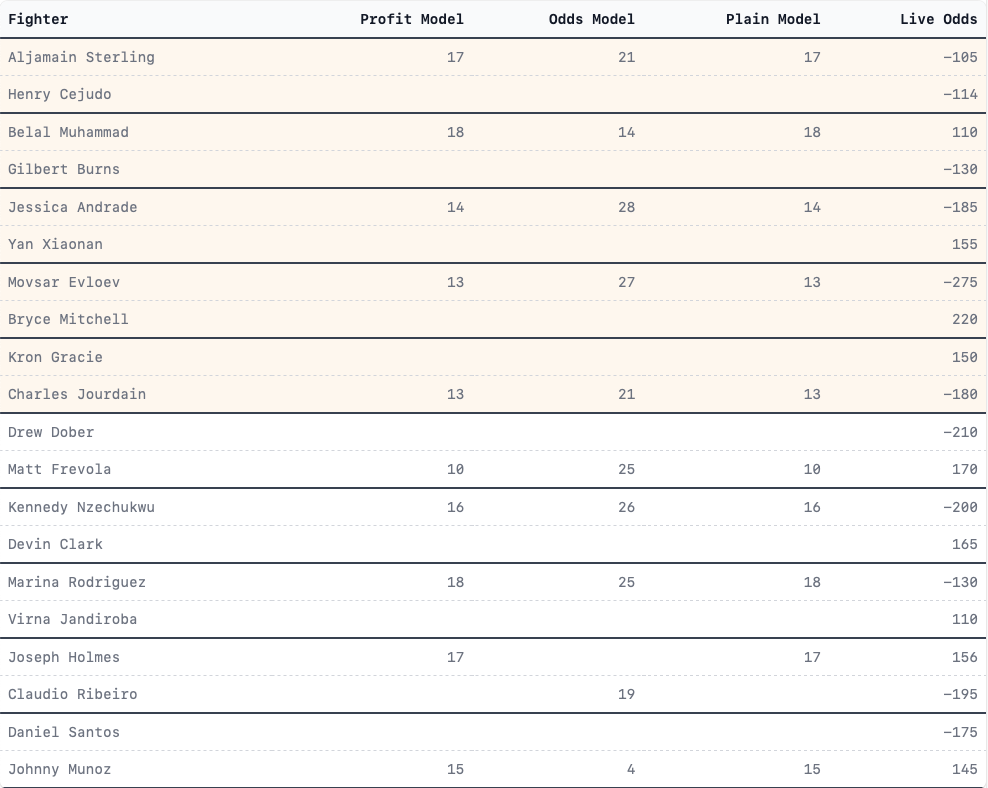

This weekend, we have UFC 288: Sterling vs. Cejudo and if you’re a member of WolfTickets.AI already you can view all the predictions and their details at: WolfTickets.AI - UFC 288

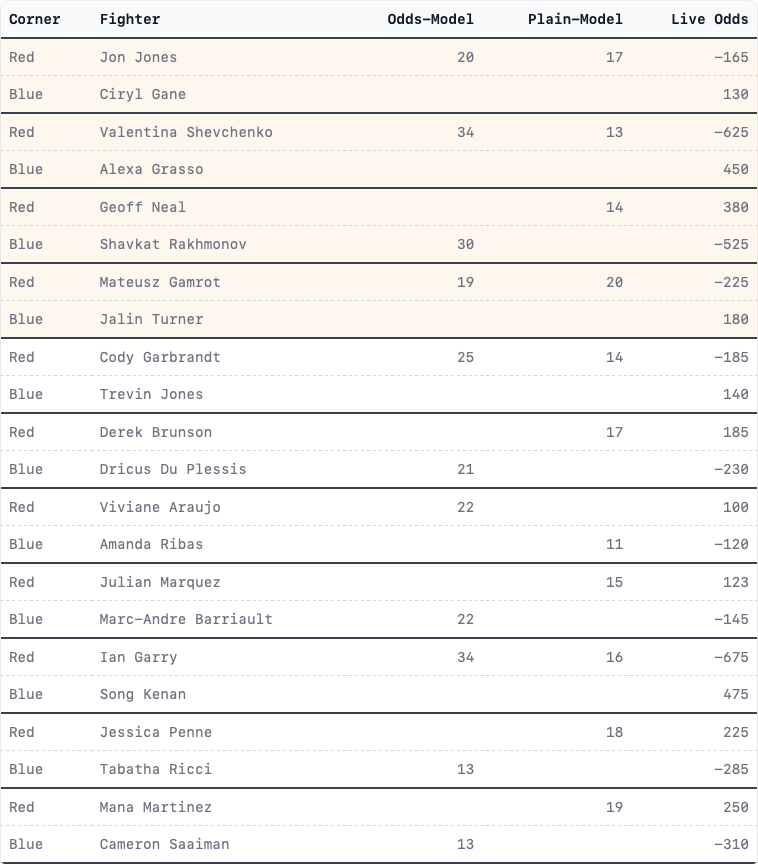

With the launch of this model, the predictions are shared here as well and they are(the orange indicates a main card fight):

What’s Next?

- A new pipeline that will execute weekly before all predictions to generate up to date features for each fighter. During this effort I noticed that the models have drifted in performance over time due to an issue in how I was continually updating, this will fix it.

- Implementing the new model into all of the tracking and reporting for accuracy each week.

- The ability to export the data for each prediction as JSON so people can explore on other systems like ChatGPTs analysis of a prediction.

- Generating new features and models based on data collected per round of a fight. This will allow for models to see more deeply into issues around cardio, the impact of damage, etc. I’m excited to see what improvements arise when these are used.

Thanks and good luck!

— Chris King

PS if you’d like to support this work and stay up to date on all the predictions, with full visibility to the stats you can sign up for WolfTickets at: https://wolftickets.ai

PS ChatGPT Teaser

Generative AI systems like ChatGPT do not always deliver factual information but the response below is what comes out at the moment if you provide the stats used by WolfTicketsAI for each fighter to ChatGPT-4 and ask it to to make a prediction.

The Prompt(JSON provided earlier):

1User2Based on the stats provided above, what is your analysis about who is likely to win in a fight between Aljamain Sterling. Use the stats provided as supporting evidence along with any facts you are aware of for the UFC's Bantamweight division.The Response:

1Based on the provided statistics and considering the fact that both fighters are in the UFC's Bantamweight division, it is challenging to predict the exact outcome of a fight between Aljamain Sterling and Henry Cejudo. However, I can provide an analysis of their strengths and weaknesses based on their stats.2

3Aljamain Sterling:4

5* Has a higher win percentage (0.8800) compared to Cejudo (0.8889)6* Has a better submission game, with a higher submission rate per fight (0.8871) than Cejudo (0.2019)7* Has a higher takedown defense ratio (1.4286) than Cejudo (0.0714)8* Lands more significant strikes per minute (4.7039) than Cejudo (3.9237)9

10Henry Cejudo:11

12* Has a higher takedown per fight rate (2.1200) compared to Sterling (1.9789)13* Has better striking accuracy (0.5399) than Sterling (0.6032)14* Has a better striking defense percentage (0.5983) compared to Sterling (0.4926)15* Has a higher recent win percentage (1.00) than Sterling (1.00)16

17It's important to note that this analysis only takes into account the statistics provided and does not consider factors such as fight style matchups, gameplans, and other external factors that may affect the outcome of the fight. Additionally, both fighters have evolved since the statistics were last updated, and their current form may not be accurately represented by these numbers.18

19In conclusion, based on the provided stats, it appears that Aljamain Sterling has an advantage in the submission and striking aspects, while Henry Cejudo has a slight edge in takedowns and striking accuracy. The fight could be very competitive, and the outcome may ultimately depend on how well each fighter can implement their gameplan and exploit the weaknesses of their opponent.Clearly there’s more to work on here but it is an interesting take on the data.