Making Weight: How a Scoring System Bolsters ML Predictions

Overview:

Machine learning models for UFC fight predictions, while powerful, often have blind spots that can impact their accuracy. This post introduces a weighted scoring system designed to address these limitations by combining ML predictions with domain expertise. The system incorporates factors such as recent weight class changes, rematch history, and the impact of previous knockouts to refine the initial ML predictions. I’ll examine the heuristics behind this approach, walk through its implementation, and demonstrate how it influences betting decisions.

The goal is to create more comprehensive fight forecasts that account for nuances often missed by pure data-driven models. This analysis aims to be valuable for those interested in UFC analytics, sports betting strategies, and the practical application of machine learning in sports prediction.

From Heuristics to Weighted Scoring

Despite years of working on fight prediction, every model I have built or operated has blind spots. The first step is to define a series of heuristics that can shore up those weaknesses. This will be a continual process, but to kick things off, here are a few that stood out as worth tackling first:

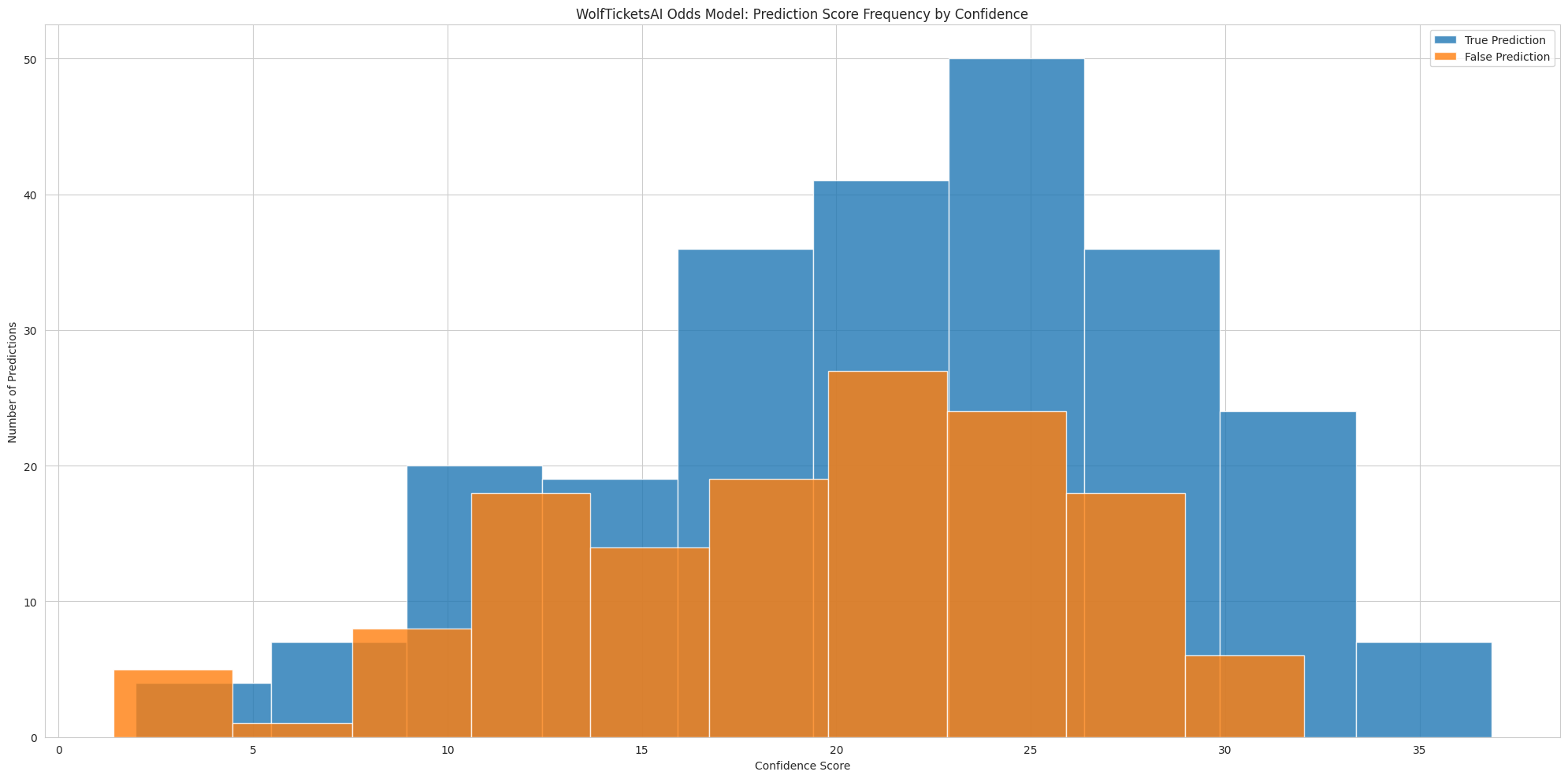

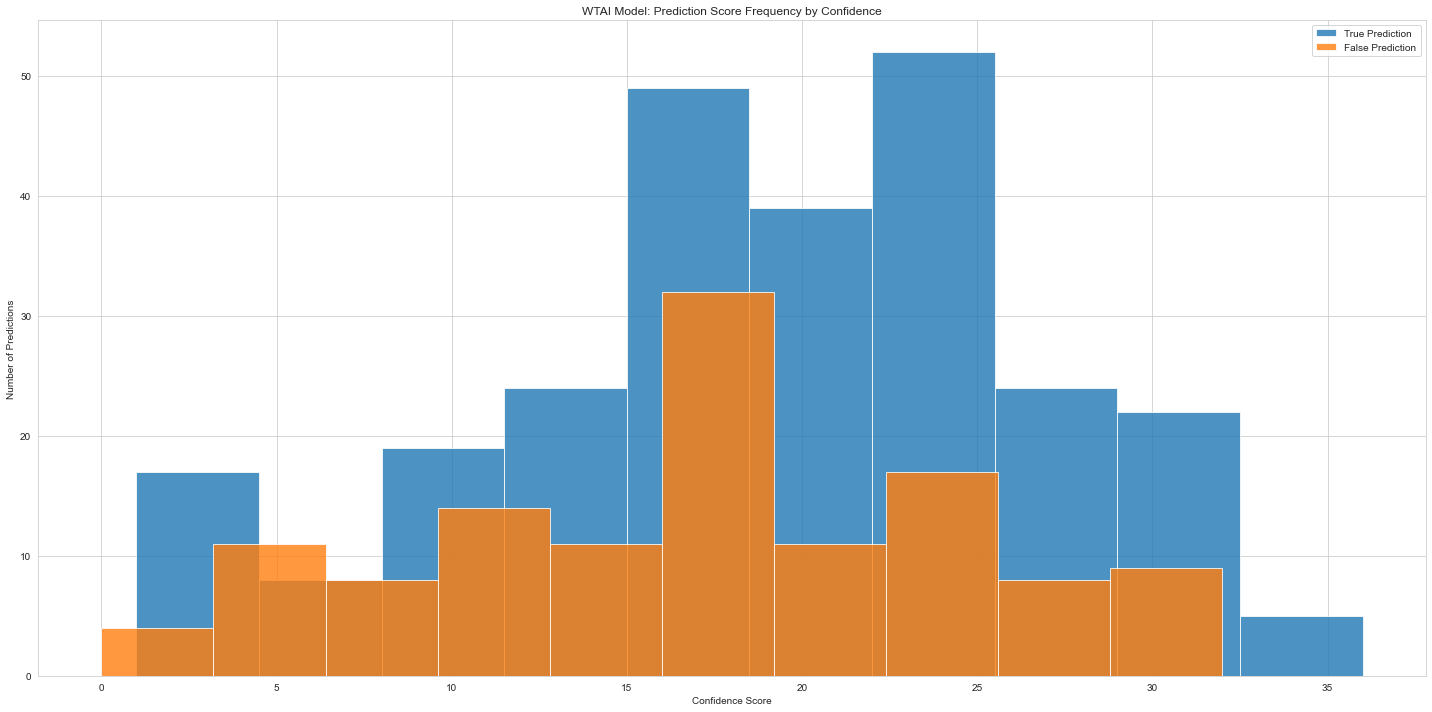

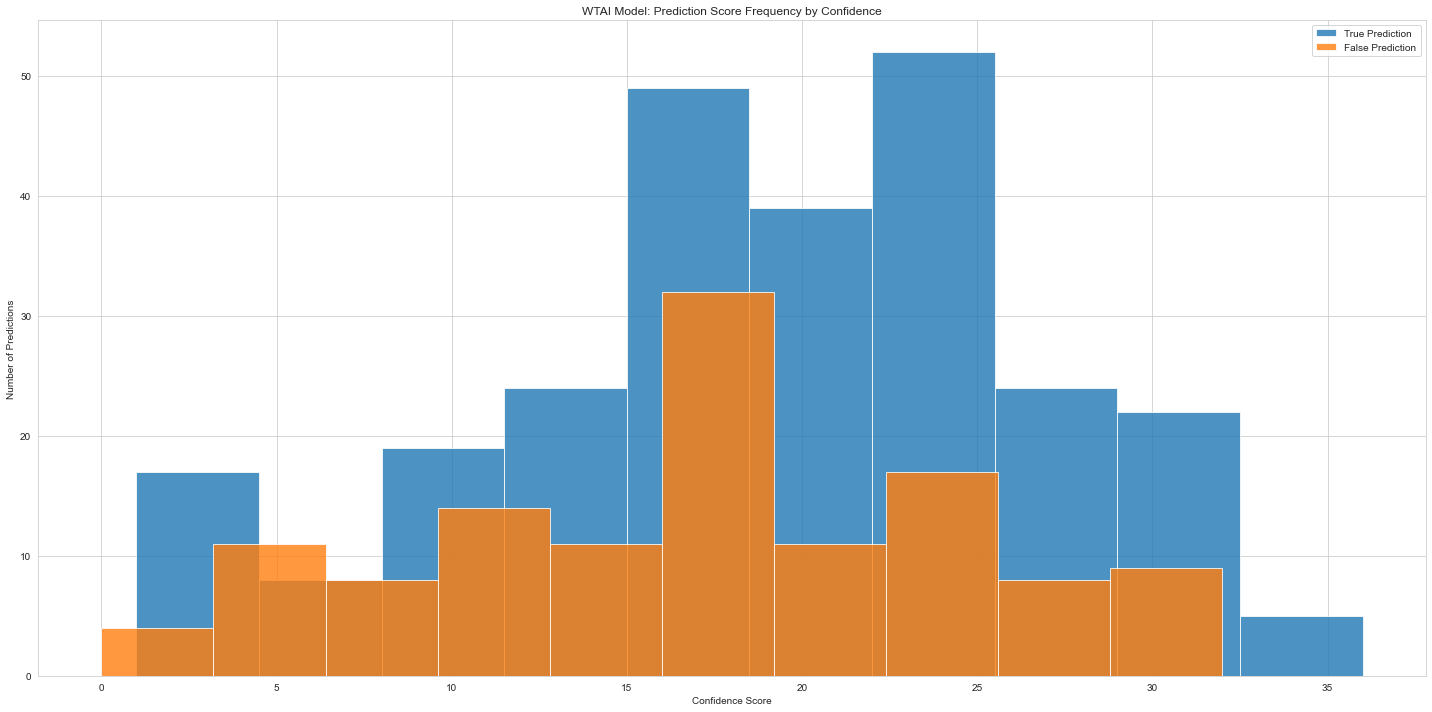

- How confident is the WTAI prediction itself? The best range is 14 to 22, and 10 to 13 is also good. Beyond 22, it is worth checking whether the stats fall outside normal ranges, which can happen when a fighter has only a limited history.

- Have the fighters fought before? Was the predicted winner also the winner of the previous fight? Is the fight on short notice? (Short-notice rematches tend to go the same way as the original bout, favoring the previous victor.)

- Has either fighter changed weight class recently? It can take a while for body recomposition to fully settle in, and at the very least a change adds uncertainty.

- Head trauma seems to compound over time, so have they been KO’ed in the last 12 months, or have they lost after a KO within a 12-month period across their last two outings?

- Finally, are they on the list of banned fighters? Eventually, fighters just need to hang it up, like Vicente Luque after his brain bleed or Tony Ferguson after the Gaethje fight.
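As a rough illustration of the first half of the head-trauma check (a KO/TKO loss in the last 12 months), here is a minimal sketch. The `Fight` record, its fields, and the function names are hypothetical stand-ins, since the post doesn’t show how fight histories are stored. The sample history is Mariya Agapova’s record as it appears in the report later in this post; the `as_of` dates are arbitrary illustration dates, not fight dates.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Fight:
    # Hypothetical record shape; the real history objects are not shown in the post.
    fight_date: date
    won: bool
    method: str  # e.g. "KO/TKO", "Submission", "Decision"


def ko_losses_within(history, as_of, days=365):
    """Count KO/TKO losses in the window of `days` ending at `as_of` (inclusive)."""
    cutoff = as_of - timedelta(days=days)
    return sum(
        1
        for fight in history
        if not fight.won
        and fight.method == "KO/TKO"
        and cutoff <= fight.fight_date <= as_of
    )


def recently_kod(history, as_of, days=365):
    """Flag a fighter who was stopped by KO/TKO inside the window."""
    return ko_losses_within(history, as_of, days) > 0


# Mariya Agapova's record from the sample report later in the post.
agapova = [
    Fight(date(2022, 9, 17), won=False, method="Submission"),
    Fight(date(2022, 3, 5), won=False, method="Submission"),
    Fight(date(2021, 10, 9), won=True, method="Submission"),
    Fight(date(2020, 8, 22), won=False, method="KO/TKO"),
    Fight(date(2020, 6, 13), won=True, method="Submission"),
]

print(recently_kod(agapova, as_of=date(2021, 6, 1)))  # KO loss on 2020-08-22 is inside the window
print(recently_kod(agapova, as_of=date(2022, 1, 1)))  # that loss has aged out of the window
```

A scorer would then turn a `True` here into a negative `adjust_confidence` call for that fighter; the size of the penalty is a tuning choice the post doesn’t specify.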

Each of these can be used to increase or decrease the confidence in a prediction when selecting which ones to bet on. The next step is to build out a framework for systematically evaluating each prediction against these heuristics, adjusting the confidence along the way.

A Basic Implementation

Starting off, what kind of data needs to go into this system?

Well, we have to have a base prediction before anything can be weighted… then more data about the fighters… then other predictions. Putting it all together, the constructor looks like this:

```python
class WeightedScorer:
    # Fighter IDs whose predicted wins should never be bet on.
    banned_fighters = [
        620,   # Vicente Luque
        1368,  # Tony Ferguson
        # Add more fighter IDs and names as needed
    ]

    def __init__(self, primary_prediction, predictions):
        self.fighter_1 = primary_prediction.fighter_1
        self.fighter_2 = primary_prediction.fighter_2
        self.primary_prediction = primary_prediction
        self.predictions = predictions
        # Start from the model's own confidence; heuristics adjust final_confidence.
        self.base_confidence = self.primary_prediction.confidence
        self.final_confidence = self.base_confidence
        self.confidence_adjustments = []
        self.is_banned_fighter = False
```

Ideally, once the heuristics have been applied to a prediction, I’d like a record of all of the changes, along with a narrative explaining them that could be useful to humans or to other AI models later.

To capture that, I wrote a really simple method that logs what happens whenever the confidence value is altered:

```python
def adjust_confidence(self, adjustment, reason):
    # Adjustments compound: each one scales the current final confidence.
    self.final_confidence *= (1 + adjustment)
    # Keep an audit trail of every change for the report.
    self.confidence_adjustments.append({
        "adjustment": f"{adjustment:+.1%}",
        "reason": reason,
        "new_confidence": round(self.final_confidence, 2),
    })
```

With these building blocks in place, I can now add a function for each heuristic. The first one adjusts the initial confidence based on how accurate each confidence range has been in the past.

The distribution curve of accuracy looks like this:

Note the huge spike around the 25 cutoff in the graph; I may alter my weighting system in the future to reflect this more closely.

The function to shift the base scores is:

```python
def adjust_base_confidence(self):
    # Reward or penalize the base confidence by how accurate each range has been historically.
    if self.base_confidence < 10:
        self.adjust_confidence(-0.3, "Base confidence < 10, decreased by 30%")
    elif 10 <= self.base_confidence < 14:
        self.adjust_confidence(0.1, "Base confidence between 10 and 13, increased by 10%")
    elif 14 <= self.base_confidence < 22:
        self.adjust_confidence(0.2, "Base confidence between 14 and 21, increased by 20%")
    elif 22 <= self.base_confidence < 27:
        self.adjust_confidence(0.05, "Base confidence between 22 and 26, increased by 5%")
    else:
        self.adjust_confidence(0, "Base confidence >= 27, no change")
```

Not the most complex, but it will work for a first pass. The rest of the process is adding more of these functions until every heuristic we have data for has one.
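Because these thresholds will probably be retuned (for example, around the spike near 25), one option is to keep the band edges and adjustments as data rather than as an `if`/`elif` chain. This is a sketch, not the site’s code: `BAND_EDGES`, `BAND_ADJUSTMENTS`, and `band_adjustment` are hypothetical names, and the values simply mirror `adjust_base_confidence` above.

```python
from bisect import bisect_right

# Band edges: each band includes its lower edge and excludes its upper edge.
BAND_EDGES = [10, 14, 22, 27]
# One adjustment per band: <10, [10, 14), [14, 22), [22, 27), >=27.
BAND_ADJUSTMENTS = [-0.30, 0.10, 0.20, 0.05, 0.0]


def band_adjustment(base_confidence):
    """Return the fractional adjustment for the band containing base_confidence."""
    # bisect_right counts how many edges are <= the value, which is the band index.
    return BAND_ADJUSTMENTS[bisect_right(BAND_EDGES, base_confidence)]


for score in (9, 10, 20, 25, 30):
    print(score, band_adjustment(score))
```

Inside the class, `adjust_base_confidence` could then pass `band_adjustment(self.base_confidence)` to `adjust_confidence`, so retuning means editing two lists instead of rewriting branches.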

With that done, the last component steps through each heuristic in turn and then logs the results, which looks like this:

```python
def generate_report(self):
    # Run every heuristic; each one may call adjust_confidence.
    self.adjust_base_confidence()
    self.set_fighter_histories()
    self.check_rematch()
    self.adjust_for_weight_changes()
    self.adjust_for_recent_ko_tko()
    self.check_banned_fighter()

    report = "<prediction_report>\n"

    # Headline numbers for the primary model's pick.
    report += "  <primary_prediction>\n"
    report += f"    <base_confidence>{self.base_confidence}</base_confidence>\n"
    report += f"    <final_confidence>{round(self.final_confidence, 2)}</final_confidence>\n"
    report += f"    <predicted_winner>{self.primary_prediction.predicted_winner.name}</predicted_winner>\n"
    report += f"    <weight_class>{self.primary_prediction.weight_class}</weight_class>\n"
    if self.is_banned_fighter:
        report += "    <warning>WARNING: Predicted winner is on the banned fighters list. Do not bet on this fight.</warning>\n"
    report += "  </primary_prediction>\n"

    # Audit trail of every confidence change.
    report += "  <confidence_adjustments>\n"
    for adj in self.confidence_adjustments:
        report += "    <adjustment>\n"
        report += f"      <value>{adj['adjustment']}</value>\n"
        report += f"      <reason>{adj['reason']}</reason>\n"
        report += f"      <new_confidence>{adj['new_confidence']}</new_confidence>\n"
        report += "    </adjustment>\n"
    report += "  </confidence_adjustments>\n"

    report += "  <odds>\n"
    report += f"    <fighter_1_odds>{self.primary_prediction.fighter_1_odds}</fighter_1_odds>\n"
    report += f"    <fighter_2_odds>{self.primary_prediction.fighter_2_odds}</fighter_2_odds>\n"
    report += "  </odds>\n"

    # Per-fighter context: weight class movement and recent fight history.
    for fighter in [self.fighter_1, self.fighter_2]:
        report += "  <fighter>\n"
        report += f"    <name>{fighter.name}</name>\n"
        report += f"    <weight_change>{self.determine_weight_change(fighter)}</weight_change>\n"
        report += "    <history>\n"
        for fight in fighter.history:
            report += f"      <fight>{fight}</fight>\n"
        report += "    </history>\n"
        report += "  </fighter>\n"

    # What the other models predicted for the same fight.
    report += "  <additional_predictions>\n"
    for pred in self.predictions:
        if pred != self.primary_prediction:
            report += "    <prediction>\n"
            report += f"      <model>{pred.mlmodel.name}</model>\n"
            report += f"      <confidence>{pred.confidence:.2f}</confidence>\n"
            report += f"      <predicted_winner>{pred.predicted_winner.name}</predicted_winner>\n"
            report += "    </prediction>\n"
    report += "  </additional_predictions>\n"

    report += "</prediction_report>"

    return report
```

For the upcoming Santos vs. Agapova fight, this renders:

```xml
<prediction_report>
  <primary_prediction>
    <base_confidence>20</base_confidence>
    <final_confidence>21.6</final_confidence>
    <predicted_winner>Luana Santos</predicted_winner>
    <weight_class>Women's Flyweight</weight_class>
  </primary_prediction>
  <confidence_adjustments>
    <adjustment>
      <value>+20.0%</value>
      <reason>Base confidence between 14 and 21, increased by 20%</reason>
      <new_confidence>24.0</new_confidence>
    </adjustment>
    <adjustment>
      <value>-10.0%</value>
      <reason>Predicted winner is moving down in weight</reason>
      <new_confidence>21.6</new_confidence>
    </adjustment>
  </confidence_adjustments>
  <odds>
    <fighter_1_odds>-390</fighter_1_odds>
    <fighter_2_odds>280</fighter_2_odds>
  </odds>
  <fighter>
    <name>Luana Santos</name>
    <weight_change>Moving down in weight (from Women's Bantamweight to Women's Flyweight)</weight_change>
    <history>
      <fight>December 9, 2023: Luana Santos won against Stephanie Egger. The fight ended in round 3 at 5:00. It was a unanimous decision. Additional details: 27 - 30. 28 - 29. 28 - 29.</fight>
      <fight>August 12, 2023: Luana Santos won against Juliana Miller. The fight ended in round 1 at 3:41. Method of victory: KO/TKO.</fight>
    </history>
  </fighter>
  <fighter>
    <name>Mariya Agapova</name>
    <weight_change>Staying at usual weight</weight_change>
    <history>
      <fight>September 17, 2022: Mariya Agapova lost against Gillian Robertson. The fight ended in round 2 at 2:19. Method of victory: Submission.</fight>
      <fight>March 5, 2022: Mariya Agapova lost against Maryna Moroz. The fight ended in round 2 at 3:27. Method of victory: Submission.</fight>
      <fight>October 9, 2021: Mariya Agapova won against Sabina Mazo. The fight ended in round 3 at 0:53. Method of victory: Submission.</fight>
      <fight>August 22, 2020: Mariya Agapova lost against Shana Dobson. The fight ended in round 2 at 1:38. Method of victory: KO/TKO.</fight>
      <fight>June 13, 2020: Mariya Agapova won against Hannah Cifers. The fight ended in round 1 at 2:42. Method of victory: Submission.</fight>
    </history>
  </fighter>
  <additional_predictions>
    <prediction>
      <model>plain_model</model>
      <confidence>5.00</confidence>
      <predicted_winner>Luana Santos</predicted_winner>
    </prediction>
    <prediction>
      <model>profit_model</model>
      <confidence>19.00</confidence>
      <predicted_winner>Luana Santos</predicted_winner>
    </prediction>
  </additional_predictions>
</prediction_report>
```

There’s some good insight there: the model was reasonably confident to begin with, and the predicted winner is moving down a weight class, which can be a negative. Seeing the outcomes, timelines, odds, and other models’ predictions alongside that further helps frame this matchup. With this content and the existing LLM-driven write-ups for each fight, we’re ready to systematically review not just individual predictions but the quality of an entire card.
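The adjustments in that report compound rather than add: 20 × 1.20 = 24.0, then 24.0 × 0.90 = 21.6, which is what `adjust_confidence` does with `*=`. The hypothetical `compound` helper below reproduces that arithmetic in isolation (it needs Python 3.8+ for `math.prod`). It makes two side effects easy to see: the final value doesn’t depend on the order the heuristics run in (only the logged intermediate values do), and equal-sized boosts and penalties don’t cancel out.

```python
import math


def compound(base, adjustments):
    """Apply fractional adjustments multiplicatively, mirroring adjust_confidence."""
    return round(base * math.prod(1 + a for a in adjustments), 2)


print(compound(20, [0.2, -0.1]))  # Santos vs. Agapova: base 20, +20%, then -10%
print(compound(20, [-0.1, 0.2]))  # same final value with the order reversed
print(compound(20, [0.2, -0.2]))  # +20% then -20% ends below the base of 20
```

If symmetric boosts and penalties should cancel exactly, the adjustments would need to be summed before being applied once; the multiplicative form instead lets each heuristic scale whatever the previous ones produced.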

Grappling with Confidence

In 2024, the UFC has repeatedly shown it is not going to put its best on every event. Some events are notoriously awful (Perez vs. Taira, Blanchfield vs. Fiorot). It is critical to spot how many predictions are likely to be good, since that determines the number and structure of bets to place for each event. For example, on Blanchfield vs. Fiorot (https://wolftickets.ai/events/ufc-on-espn-blanchfield-vs-fiorot/6642293373758126235/) we went 4/7 on predictions with confidence over 14; had we refined the approach to look only at where the models agreed, we’d have gone 4/5, missing only Bill Algeo.

Filtering this week’s predictions for those with a final confidence value over 14 yields:

- Bonfim predicted to win, score 21.6

- Rodriguez predicted to win, score 19.8

- Santos predicted to win, score 21.6

- Van predicted to win, score 18.0
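A sketch of that filtering step, plus the stricter “models agree” check from the Blanchfield vs. Fiorot example. The function names are hypothetical, and treating agreement as “every model picks the same winner, regardless of its confidence” is an assumption about what that check means. The scores are this week’s final confidences listed above; the only model picks used are the three Luana Santos picks from the sample report, since the other fights’ picks aren’t shown.

```python
def above_threshold(scores, threshold=14.0):
    """Keep (fighter, final_confidence) pairs strictly above the threshold, highest first."""
    kept = [s for s in scores if s[1] > threshold]
    # sorted() is stable, so tied scores keep their original order.
    return sorted(kept, key=lambda s: s[1], reverse=True)


def models_agree(primary_pick, other_picks):
    """True when every additional model predicts the same winner as the primary model."""
    return all(pick == primary_pick for pick in other_picks)


this_week = [("Bonfim", 21.6), ("Rodriguez", 19.8), ("Santos", 21.6), ("Van", 18.0)]
print(above_threshold(this_week))

# plain_model and profit_model both picked Luana Santos in the sample report.
print(models_agree("Luana Santos", ["Luana Santos", "Luana Santos"]))
```

Note that agreement here ignores each model’s confidence: `plain_model` backs Santos at only 5.00, so a stricter variant might also require every agreeing model to clear its own threshold.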

With this information, what bets should be placed? A few approaches are:

- Bet all of them together in a parlay.

- Structure several parlays based on how confident you are in each prediction, with the least confident picks appearing in the fewest parlays.

- Short parlays that reduce exposure to any single loss by capping how many parlays each fighter appears in.

Rather than fixing the number of parlays at, say, 3 to 5, I’m going to let it fall out of two constraints: a fighter can’t appear in more than 2 parlays, and the parlays shouldn’t overlap much. There are certainly many other options.
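Those two constraints can be sketched as a greedy search over two-leg combinations: take the highest combined confidence first, and skip any parlay that would push a fighter past two appearances or share more than `max_shared` legs with a parlay already chosen. The function name, the greedy ordering, and reading “not a lot of overlap” as “no shared legs” by default are all assumptions, not necessarily the exact procedure used here.

```python
from itertools import combinations


def build_parlays(scores, legs=2, max_appearances=2, max_shared=0):
    """Greedily choose parlays under per-fighter and overlap limits.

    scores: dict mapping fighter -> final confidence.
    """
    # Strongest combined confidence first; ties keep combinations() order.
    candidates = sorted(
        combinations(scores, legs),
        key=lambda combo: sum(scores[f] for f in combo),
        reverse=True,
    )
    appearances = {f: 0 for f in scores}
    chosen = []
    for combo in candidates:
        # Cap how many parlays any single fighter can sink.
        if any(appearances[f] >= max_appearances for f in combo):
            continue
        # Limit shared legs with every parlay already selected.
        if any(len(set(combo) & set(existing)) > max_shared for existing in chosen):
            continue
        chosen.append(combo)
        for f in combo:
            appearances[f] += 1
    return chosen


week = {"Bonfim": 21.6, "Rodriguez": 19.8, "Santos": 21.6, "Van": 18.0}
print(build_parlays(week))
print(build_parlays(week, max_shared=1))
```

On this week’s four picks the default settings also yield two parlays, though the greedy ordering pairs the two 21.6 picks together, which concentrates the strongest picks on one ticket. Relaxing `max_shared` to 1 lets the appearance cap do the limiting and produces three overlapping parlays instead.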

This approach yields 2 parlays for this event. You could shuffle the pairings, but I’ve selected:

- Gabriel Bonfim & Christian Rodriguez: 1U @ -124

- Luana Santos & Joshua Van: 1U @ -119
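A combined parlay price comes from converting each leg’s American odds to decimal odds, multiplying them, and converting back. The post doesn’t list the individual leg prices behind -124 and -119, so the hypothetical helpers below are demonstrated on round numbers instead; a sportsbook’s quoted parlay price can differ slightly because of rounding.

```python
def american_to_decimal(odds):
    """Convert American odds (e.g. -390, +280) to decimal odds (total return per unit staked)."""
    if odds > 0:
        return 1 + odds / 100
    return 1 + 100 / abs(odds)


def decimal_to_american(decimal_odds):
    """Convert decimal odds back to American odds, rounded to a whole number."""
    if decimal_odds >= 2:
        return round((decimal_odds - 1) * 100)
    return round(-100 / (decimal_odds - 1))


def parlay_american(leg_odds):
    """Price a parlay by multiplying each leg's decimal odds."""
    total = 1.0
    for odds in leg_odds:
        total *= american_to_decimal(odds)
    return decimal_to_american(total)


print(parlay_american([-200, -200]))  # 1.5 * 1.5 = 2.25 decimal
print(parlay_american([100, 100]))    # 2.0 * 2.0 = 4.0 decimal
print(parlay_american([-300, -300]))  # two heavy favorites still price as a favorite
```

For scale, a 1U stake at -124 returns about 0.81U of profit if both legs win (100 / 124 ≈ 0.806).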

Good luck to anyone tailing these choices; I’ll keep refining the system.

Future Work:

A few more items will get added to the site soon, so stay tuned:

- A table that shows the final confidence for each prediction.

- Full details on the heuristic evaluation per prediction.

- A short writeup on the event as a whole beforehand.

Conclusion:

The weighted scoring system presented here represents a step forward in refining UFC fight predictions. By combining machine learning outputs with carefully considered heuristics, it addresses some of the blind spots inherent in purely data-driven models.

This approach offers several advantages:

- It provides a structured method for incorporating domain expertise into ML predictions.

- It allows for transparent adjustments to confidence levels based on specific fight factors.

- It generates detailed reports that can inform more nuanced betting strategies.

However, it’s important to note that this system is not a magic solution. The world of MMA is inherently unpredictable, and no model can account for all variables.

As I continue to refine this system, I’ll be closely monitoring its performance across various events and fight conditions. Future iterations may incorporate additional heuristics, more sophisticated weighting mechanisms, or even feedback loops to adjust the system based on outcomes.

Ultimately, the goal is to provide a more complete picture of each fight, empowering you to make more informed decisions.

Good Luck!