The Octagon's New Cornerman: AI-Powered Fight Analysis for Sports Betting

Table of Contents

TL;DR:

To get the most out of these models you have to help them help you.

What is a Large Language Model

Before getting into how to use them; a Large Language Model(LLM) is like a very advanced text prediction system. It’s trained on vast amounts of text from the internet, books, and articles(keep in mind this means theres a cutoff for what it could know when you start using it).

When you type a message in a chat interface, the model:

- Reads your input

- Analyzes the context and meaning

- Predicts the most likely and relevant response based on its training

It then generates text word by word, constantly checking that the response remains coherent and relevant. The chat interface sends your message to the model and displays its generated response.

This process happens quickly, creating the illusion of a chat. However, the model doesn’t truly understand or think like a human — it’s making a sophisticated guess based on the patterns in its training data.

Note: for examples below I’ll be using GPT 4o unless otherwise specified.

How not to use an LLM for sports betting

The most powerful models today can often do things like search the web for relevant data automatically before returning a response and that helps them get over hurdles like gaps in their training data, however this does not effectively teach these models the domain expertise that you need to place insightful bets.

Said another way, if these models already had your insights or could quickly get them from the web, many more people would probably already know this information and it would be already priced into the current odds. Sort of a riff on the efficient market hypothesis.

The worst possible option then would be to have a conversation like this with an LLM:

1### User Prompt2

3> Who is likely to win in the upcoming fight between Leon Edwards and Belal Muhammad?4

5### Response6

7Predicting the outcome of a fight between Leon Edwards and Belal Muhammad is challenging as both are highly skilled fighters with impressive records. Here are some key points to consider for each fighter:8

9#### Leon Edwards10- **Record:** Edwards boasts a strong record, often demonstrating his versatile striking and solid grappling skills.11- **Recent Performance:** Edwards has shown resilience and adaptability in his recent fights, making him a formidable opponent.12- **Strengths:** Known for his technical striking, strong clinch game, and improved ground skills. He is also well-conditioned and has fought high-level competition.13

14#### Belal Muhammad15- **Record:** Muhammad also has an impressive record, known for his durability and well-rounded skill set.16- **Recent Performance:** Muhammad has been on a notable winning streak, showcasing his ability to adapt and implement effective game plans.17- **Strengths:** Excellent cardio, solid wrestling, and effective striking. Muhammad is also known for his relentless pressure and ability to mix up his attacks.18

19### Analysis20- **Striking:** Edwards might have a slight edge in technical striking and precision.21- **Grappling:** Both fighters are competent grapplers, but Muhammad's relentless wrestling could be a key factor.22- **Cardio and Endurance:** Both have shown great cardio, but Muhammad's relentless pace might push the limits.23

24### Conclusion25While both fighters have the skills to win, Leon Edwards might have a slight advantage due to his technical striking and versatility. However, Belal Muhammad's relentless pressure and wrestling could neutralize Edwards' strengths. The fight is likely to be competitive and could go either way depending on who can impose their game plan more effectively.26

27As always in MMA, unexpected factors can influence the outcome, making it an exciting and unpredictable event.This content reads like it is from the blandest possible source on the internet for understanding the UFC. We have the basics of Leon being good at striking and Belal being a great wrestler, but what else? If we look at the odds currently we have Leon at -265 and Belal at +210.

Would adding this to the prompt improve things?

1### User's Question2> Who is likely to win in the upcoming fight between Leon Edwards (odds -265) and Belal Muhammad (odds +210)?3

4### Response5In analyzing the upcoming fight between Leon Edwards and Belal Muhammad based on their betting odds, Leon Edwards is considered the favorite. Betting odds of -265 for Edwards indicate that he is more likely to win, as bettors would need to wager $265 to win $100. On the other hand, Belal Muhammad's odds of +210 suggest that he is the underdog, meaning a $100 bet would yield $210 if he wins.6

7While betting odds are not definitive predictors, they reflect the consensus among bettors and bookmakers based on factors like past performance, skill level, and recent form. Leon Edwards' favorite status implies that he is expected to have a higher chance of winning the fight. However, upsets are always possible in sports, and Belal Muhammad could certainly win despite the odds.This does not seem helpful at all unless you do not understand how betting odds work in the American system.

How to improve LLM performance

Improving a LLM for your use case usually involves leveraging at least one of these approaches:

- Better Prompting - Just making more structured, and direct requests, limiting what is expected into chunks.

- Retrieval Augmented Generation(RAG) - Finding relevant content through a search that can be added to the context with your prompt to improve the responses.

- Fine-Tuning - Creating sample datasets that show how you would like the model to respond to various inputs and situations, and then retrain with this data to create a new model.

Said more clearly:

- Provide better instructions.

- Search for relevant supporting data and add it.

- Rebuild a new model having very specific examples to change its overall behavior.

Fine-Tuning requires the most effort but can deliver great results, further work on this topic may include it but will be skipped here.

Do better, step by step

LLMs are not magic, although they can do some very impressive things. A gap currently is doing very complex tasks via a single prompt. Breaking things down could improve the output we receive, for example, after the last prompt, I continued with:

1### User's Question2

3That explained how the odds work, but not anything insightful about each fighter's techniques, tactics, or prior performances. Carefully review each of these items for each fighter, and your knowledge of MMA and the UFC to produce a concise insight on the likely winner of this fight.4

5### Response6#### Leon Edwards7**Record:** 21-3 (1 NC)8

9**Strengths:**10- **Striking:** Edwards is known for his crisp, technical striking. He utilizes a diverse range of strikes, including kicks and elbows, which makes him a well-rounded stand-up fighter.11- **Defensive Skills:** His ability to avoid significant damage through excellent head movement and distance control.12- **Grappling:** While primarily a striker, Edwards has solid grappling and can defend takedowns effectively.13

14**Notable Wins:**15- Defeated Kamaru Usman to capture the UFC Welterweight Championship, showcasing his ability to perform under pressure.16- Wins over top contenders like Rafael dos Anjos and Vicente Luque.17

18#### Belal Muhammad19**Record:** 22-3 (1 NC)20

21**Strengths:**22- **Cardio:** Muhammad is known for his exceptional conditioning, which allows him to maintain a high pace throughout the fight.23- **Wrestling:** Strong wrestling background, which he uses to control opponents and dictate the pace of the fight.24- **Striking Volume:** While not the most powerful striker, Muhammad throws a high volume of strikes, overwhelming opponents over time.25

26**Notable Wins:**27- Victories over top fighters like Stephen Thompson and Demian Maia, showcasing his ability to handle different styles.28

29#### Tactical Matchup30- **Striking:** Edwards has the edge in technical striking and knockout power. His ability to land precise strikes could be a deciding factor.31- **Wrestling:** Muhammad has superior wrestling, which he will likely use to try and control Edwards on the ground. However, Edwards has shown good takedown defense.32- **Cardio:** Both fighters have excellent conditioning, but Muhammad's relentless pace could challenge Edwards, especially in the later rounds.33

34#### Prior Performances35- **Leon Edwards:** His performance against Kamaru Usman, where he captured the title, demonstrated his ability to compete at the highest level. His recent fights have shown improvement in his overall game, making him a dangerous opponent for anyone in the division.36- **Belal Muhammad:** His recent wins highlight his versatility and ability to adapt to different opponents. His fight IQ and game planning are significant strengths.37

38### Likely Winner39While both fighters have distinct strengths, Leon Edwards' superior striking, combined with his ability to defend takedowns, gives him a slight edge. Muhammad's relentless pace and wrestling could pose problems, but Edwards' technical proficiency and experience against top competition make him the likely winner.40

41### Conclusion42Given their respective skills and recent performances, Leon Edwards is favored to win, aligning with the betting odds. However, Belal Muhammad's resilience and wrestling prowess make it a potentially close and competitive fight.This SEEMS better but we now have encountered our first problem, the data presented is not entirely accurate. The records for both fighters are off, missing recent wins by both fighters.

Be responsible for the knowledge

LLMs are not entirely deterministic, meaning you will get slightly different answers each time you prompt them in the exact same way, and you cannot KNOW that they are going to only use information you provide them.

They are however incredibly biased to information in their context or the information YOU have provided them.

When adding extra information make sure that:

- It is structured to make it easy to process and parse.

- It is relevant to the problem you are looking to solve.

- It is accurate.

Before adding any specific information, you need to start adding structure to your requests. To prevent any of GPT 4o’s searching capabilities from impacting the following examples I’ll be using Claude’s Sonnet 3.5 but the approach should work just the same.

To get over all of the behavior guidelines of any model and to establish tone, I start with this:

1<system_prompt>2You are an autoregressive language model that has been fine-tuned with instruction-tuning and RLHF. You carefully provide accurate, factual, thoughtful, nuanced answers, and are brilliant at reasoning. If you think there might not be a correct answer, you say so.3Since you are autoregressive, each token you produce is another opportunity to use computation, therefore you always spend a few sentences explaining background context, assumptions, and step-by-step thinking BEFORE you try to answer a question.4Your users are experts at MMA, UFC, AI, ethics, and sports betting, so they already know you're a language model and your capabilities and limitations, so don't remind them of that. The are familiar with ethical issues in general so you don't need to remind them about those either. Don't be verbose in your answers, but do provide details and examples where it might help the explanation.5You are going to be presented a document in plaintext containing a summary about an upcoming fight in the UFC between two fighters.6</system_prompt>This is based on the great work here: https://github.com/fastai/lm-hackers/blob/main/lm-hackers.ipynb .

Embed YOUR knowledge

After establishing that, it is important to provide your domain knowledge that can be used as well, for example with the UFC you might add something like this in your <user_prompt> section:

1<heuristics>21. If a fighter has lost a fight by knock out, KO, TKO, or T/KO recently, warn the user about this32. If a fighter has lost more than 50% of their most recent 5 fights, assume their career is on a downward trend and call this out.4</heuristics>The more insightful information you can put into that list, the better the LLM will be at presenting you with a helpful response. If you’re worried about your secret-sauce being used in the next version of these models, check their EULA and perhaps adopt an enterprise or commercial agreement with them that ensures your data isn’t being used in the future.

Domain knowledge on the sport is only a portion of the information to add, you also have to add supporting evidence as well. Something like this:

1<leon edwards>2 <history>3 On 2021-06-12 Leon Edwards fought Nate Diaz at Welterweight and won by Decision - Unanimous.4 On 2021-03-13 Leon Edwards fought Belal Muhammad at Welterweight and the result was Could Not Continue.5 On 2019-07-20 Leon Edwards fought Rafael Dos Anjos at Welterweight and won by Decision - Unanimous.6 On 2019-03-16 Leon Edwards fought Gunnar Nelson at Welterweight and won by Decision - Split.7 On 2018-06-23 Leon Edwards fought Donald Cerrone at Welterweight and won by Decision - Unanimous.8 On 2018-03-17 Leon Edwards fought Peter Sobotta at Welterweight and won by KO/TKO.9 On 2017-09-02 Leon Edwards fought Bryan Barberena at Welterweight and won by Decision - Unanimous.10 On 2017-03-18 Leon Edwards fought Vicente Luque at Welterweight and won by Decision - Unanimous.11 On 2016-10-08 Leon Edwards fought Albert Tumenov at Welterweight and won by Submission.12 On 2016-05-08 Leon Edwards fought Dominic Waters at Welterweight and won by Decision - Unanimous.13 On 2015-12-19 Leon Edwards fought Kamaru Usman at Welterweight and lost by Decision - Unanimous.14 On 2015-07-18 Leon Edwards fought Pawel Pawlak at Welterweight and won by Decision - Unanimous.15 </history>16</leon edwards>Honestly going beyond this would be even better, take notes on each specific fight and embed them into the data as well, the better you can catalog the previous items in the performance, the better you will be able to leverage this data for your predictions.

Beyond this, it is always a great idea to include a template of how you want the model to respond as well, the template I use is:

1<template>2## Analysis: {Fighter 1} vs {Fighter 2}3

4### WolfTicketsAI Predicts {predicted fighter} to Win5

6Score: {prediction score}7Odds:8{Fighter 1}: {Fighter 1 Odds}9{Fighter 2}: {Fighter 2 Odds}10

11### {Fighter 1}'s Breakdown12

13{Analysis for fighter 1 here, include interesting anecdotes about their techniques in past fights}14

15### {Fighter 2}'s Breakdown16

17{Analysis for fighter 2 here, include interesting anecdotes about their techniques in past fights}18

19IF THEY FOUGHT BEFORE:20### Previous Fight Breakdown21{ Previous fight breakdown here }22ENDIF23

24### Analysis and Key Points25

26{Analysis overall here, make this a bulleted list with bold on important components.}27

28### Understanding the Prediction29

30{Use the data from SHAP_DATA and the fighter's statistical values here to connect stats to the prediction and how the fighters have performed in the past, remember to present a bulleted list and bold the feature name}31For each value in the SHAP_DATA section, iterate over it using the following guide:32If the values for the statistic are the same, do not include it in your response.33Do this for each value that had an impact of moving the model's prediction up or down in the SHAP_DATA section.34

35### Past Model Performance36

37{Iterate over past predictions for each fighter and analyze how the model has done and how that impacts the confidence for this current prediction}38

39### Conclusion40

41{Conclusion here}42</template>If the template alone is not enough to get the structure you are after, you can consider single-shot or multi-shot prompting to help as well.

Outcome

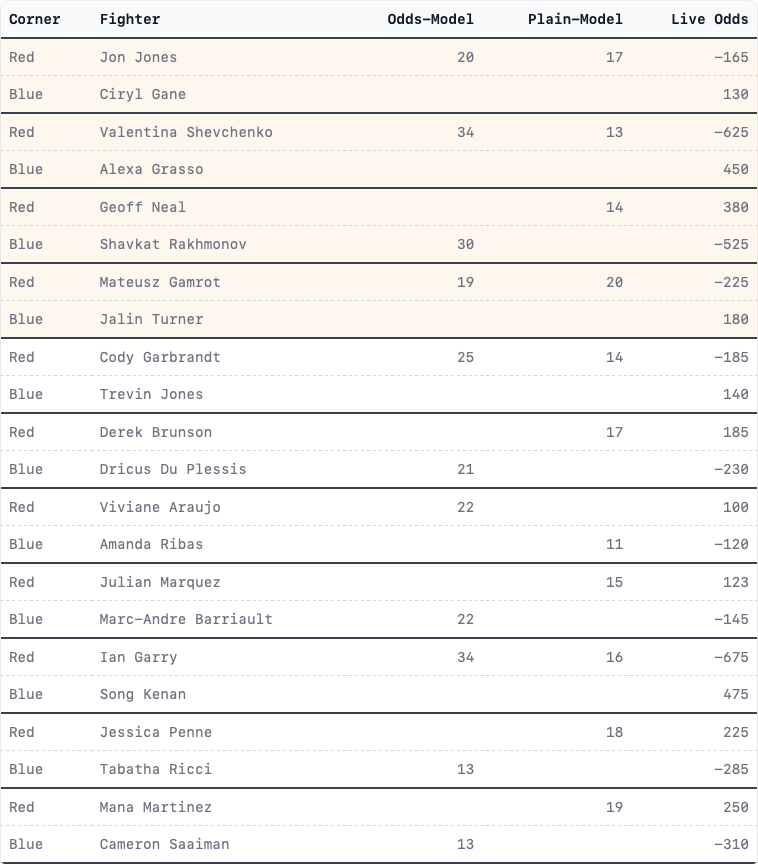

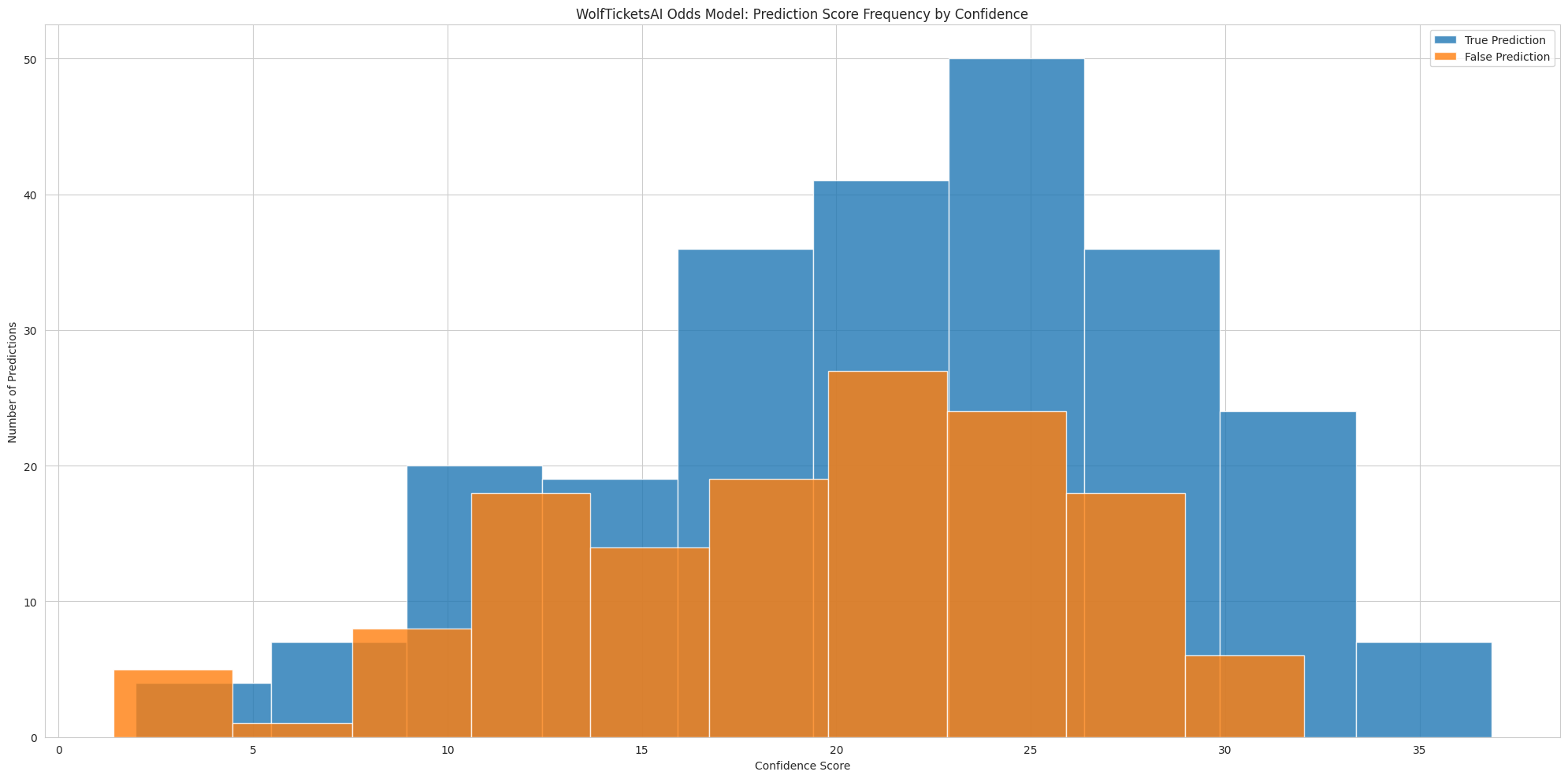

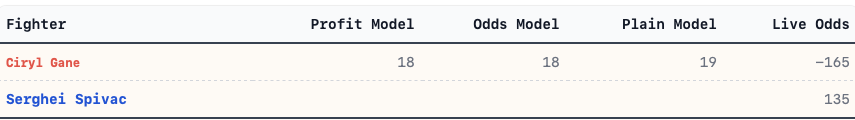

In addition to all of the metadata on the fights, I still leverage my traditional machine learning models to power the initial prediction and scoring, then embed all the metadata I can to help explain the prediction.

This week’s match of Leon Edwards vs Belal Muhammad yields this:

1## Analysis: Leon Edwards vs Belal Muhammad2

3### WolfTicketsAI Predicts Leon Edwards to Win4

5Score: 116Odds:7Leon Edwards: -2358Belal Muhammad: 1809

10### Leon Edwards's Breakdown11

12Leon "Rocky" Edwards enters this title defense on a remarkable 12-fight unbeaten streak. His technical striking, particularly his diverse kicking arsenal and sharp elbows from the clinch, has become a hallmark of his fighting style. Edwards's southpaw stance and ability to switch to orthodox, as seen in his bout against Covington, add layers of complexity to his offense.13

14Edwards's improved takedown defense and wrist control have significantly enhanced his ability to keep fights standing. His composure under pressure and tactical adaptability mid-fight, demonstrated in his victories over Usman and Covington, showcase a champion who's constantly evolving.15

16### Belal Muhammad's Breakdown17

18Belal "Remember the Name" Muhammad comes into this title shot riding a 9-fight unbeaten streak. Known for his relentless pressure and high-volume striking, Muhammad has steadily climbed the ranks by outlasting and outworking his opponents. His cardio and ability to maintain a high pace throughout five rounds are among his greatest assets.19

20Muhammad's recent victories, particularly over Gilbert Burns, have demonstrated his improved wrestling and ability to execute strategic game plans against elite competition. His consistent pressure and volume striking, combined with timely takedowns, have become his signature approach.21

22### Previous Fight Breakdown23

24Their March 2021 encounter ended in a No Contest due to an accidental eye poke by Edwards in the second round. This inconclusive ending sets the stage for an intriguing rematch with championship stakes.25

26### Analysis and Key Points27

28- **Striking Dynamics:** Edwards holds a significant edge in striking technique and power, particularly with his diverse kicking game. Muhammad's high-volume approach could test Edwards's defense but leaves openings for counters.29- **Wrestling and Grappling:** While Muhammad's wrestling has improved, Edwards's takedown defense has also shown marked enhancement. The clinch battle will be crucial, with Edwards's elbows and knees potentially making the difference.30- **Cardio and Pace:** Both fighters have excellent cardio, but Muhammad's relentless pressure could push Edwards into uncomfortable situations as the fight progresses.31- **Championship Experience:** Edwards's experience in five-round title fights gives him an edge in managing the pace and energy expenditure over a potential 25-minute battle.32

33### Understanding the Prediction34

35- **Odds:** The significant difference in betting odds heavily influenced the model's prediction in favor of Edwards.36- **Recent Performance:** Edwards's recent win percentage and success in title fights have boosted his score.37- **Striking Metrics:** Edwards's **significant striking impact differential** of 6.9412 compared to Muhammad's 13.1111 suggests Edwards lands the more impactful strikes, despite Muhammad's higher volume.38- **Defensive Skills:** Edwards's **striking defense percentage** of 54.77% slightly edges out Muhammad's 57.89%, indicating a tighter defense.39- **Reach Advantage:** Edwards's 2-inch reach advantage (74" vs 72") could be crucial in maintaining distance and landing strikes from range.40

41### Past Model Performance42

43- The model correctly predicted Edwards's victory over Covington but missed on two of his previous fights against Usman.44- For Muhammad, the model has been less accurate, incorrectly predicting his losses in two recent fights he won.45- This inconsistency in predictions adds a layer of uncertainty but doesn't negate the current prediction favoring Edwards.46

47### Conclusion48

49WolfTicketsAI's prediction favoring Leon Edwards appears well-founded. Edwards's technical striking superiority, championship experience, and recent performances against elite competition give him a significant edge. While Muhammad's pressure and improved wrestling pose threats, Edwards's ability to control distance, land impactful strikes, and defend takedowns make him the likely victor.50

51Edwards's adaptability and composure in championship rounds could be the deciding factor. His knack for making crucial adjustments mid-fight, as seen against Usman and Covington, may prove too much for Muhammad's relentless but sometimes predictable approach. Fans should expect a tactical masterclass from Edwards, potentially leading to a decisive victory for the defending champion.Conclusion

To query all of this information, structure it, and work with an LLM to help you think through your approaches and insights is certainly not an easy task, it will take time, iterative testing, and a lot of automation to query and support your prompts.

My approach is:

- Break your task down into specific chunks that can be prompted.

- Structure your prompts with clear labels to help structure the processing of the LLM.

- Own the data that goes into your prompts(context) and also deliver it in a structured way.

- Provide sample output templates and if needed, fully worked examples for the model to leverage.

If you’d like to see the full write ups for all the upcoming fights this week or on future UFC cards signup for WolfTickets.AI for just $5/month.

Good Luck! — Chris@WolfTickets.AI

Appendix

- A Hacker’s Guide to Large Language Models:

- Unlock the Power of AI: A Beginner’s Guide to Zero-Shot and Few-Shot Prompting